Fun and Games with Overlay Tunnels, Part 2: How to Set Up a Working 3-Tier Hierarchy

Recently my teaching colleagues from VMware sent me this set of questions:

"Can I create a full, global mesh even using different hubs?

Gateways are not an option in this scenario.

In other words, I have:

- AMER DC with Hub Cluster

- EMEA DC with Hub Cluster

- APAC DC with Hub Cluster

And I have profiles that use dynamic E2E VPN set to use the regional hub.

Can we, in this topology, get, essentially, a full overlay mesh between Edges directly? Like, can I actually build a tunnel from, say, a Tokyo Edge to a Chicago Edge even with different hubs?

Will secondary hubs in the VPN config provide the meet-in-the-middle connectivity in order to create the E2E VPN? My understanding of the hub cluster order in the Cloud VPN config is that we simply use the first cluster, but if that is unavailable, we use the next cluster in the list."

My first assumption was:

In my opinion, E2E VPN (static or dynamic) only works when there is a continuous path of permanent overlay tunnels, one or two hops long, between the two Edge devices.

So you would need a multitier hierarchy (see the VMlive session "Design Principles for Scaling a Global SD-WAN Network").

Or you create or use a separate backbone that is not SD-WAN (from an MSP, a telco, AWS or Azure, MPLS…).

After all, SD-WAN is a last-mile and mid-mile technology.

If you have a high-speed, lossless, low-latency, low-jitter backbone, any overlay is a disadvantage: it costs CPU cycles on the Edges for encryption and decryption, and it reduces the usable MTU because every packet carries additional tunnel headers.
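To put a rough number on the MTU penalty, here is a minimal Python sketch that subtracts the encapsulation overhead from the link MTU. Only the 20-byte outer IPv4 header and the 8-byte UDP header are standard sizes; the tunnel-header and encryption values are placeholders assumed for illustration, not measured VMware SD-WAN figures, so substitute the real numbers for your deployment.

```python
from typing import Mapping

# Illustrative overheads in bytes. Outer IPv4 (20) and UDP (8) headers are
# standard sizes; the tunnel and encryption values are ASSUMED placeholders,
# not measured VMware SD-WAN figures.
ASSUMED_OVERHEADS = {
    "outer IPv4 header": 20,
    "outer UDP header": 8,
    "tunnel header (assumed)": 32,
    "encryption overhead (assumed)": 40,
}


def effective_mtu(link_mtu: int, overheads: Mapping[str, int]) -> int:
    """Return the largest inner packet that still fits after encapsulation."""
    total = sum(overheads.values())
    if total >= link_mtu:
        raise ValueError("encapsulation overhead exceeds the link MTU")
    return link_mtu - total


def overhead_ratio(link_mtu: int, overheads: Mapping[str, int]) -> float:
    """Fraction of each full-size packet spent on encapsulation headers."""
    return sum(overheads.values()) / link_mtu


if __name__ == "__main__":
    print(f"inner MTU: {effective_mtu(1500, ASSUMED_OVERHEADS)} bytes")
    print(f"overhead:  {overhead_ratio(1500, ASSUMED_OVERHEADS):.1%}")
```

With these placeholder values a 1500-byte link leaves 1400 bytes for the inner packet, so about 6.7% of every full-size packet is spent on headers, a cost an already clean backbone does not need to pay.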

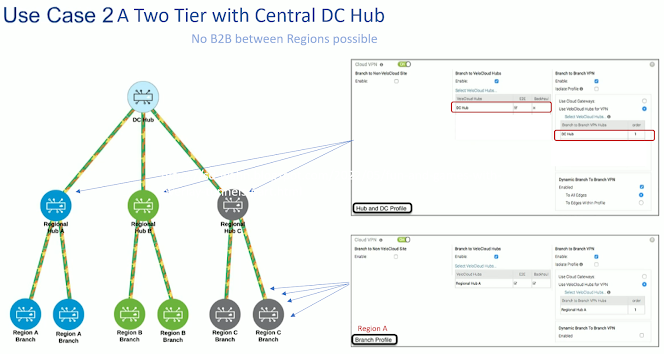

In the VMlive presentation mentioned above, the presenter showed examples of a multitier hierarchy in which Branch2Branch traffic is only possible within the same region.

The original presentation also defined a full mesh of permanent tunnels between all regional Hubs, with the restriction mentioned above: there is no way to build Branch2Branch tunnels between Branches in different regions.

- The OFC (Overlay Flow Control) table on the Orchestrator only shows the Branch networks learned directly from the corresponding Edge.

- Branch Edges do not show any non-regional Branch routes.
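The cross-region restriction falls out of simple hop counting. The following Python sketch models the hierarchy as a graph of permanent tunnels (Branch Edge to regional Hub, full mesh between the regional Hubs) and uses a breadth-first search to count overlay hops. The node names are illustrative, and the 2-hop limit encodes my working assumption from earlier in this post, not a documented product limit.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# Simplified model of the multitier design: every Branch Edge has a permanent
# tunnel to its regional Hub, and the regional Hubs are fully meshed.
# Node names are illustrative only.
PERMANENT_TUNNELS: List[Tuple[str, str]] = [
    ("VCE-A1", "Hub-A"),
    ("VCE-A2", "Hub-A"),
    ("VCE-B1", "Hub-B"),
    ("VCE-B2", "Hub-B"),
    ("Hub-A", "Hub-B"),  # Hub-to-Hub full mesh (only two regions here)
]

# Working assumption: E2E needs a 1- or 2-hop path of permanent tunnels.
# This is NOT a documented product limit.
MAX_E2E_HOPS = 2


def build_graph(tunnels: List[Tuple[str, str]]) -> Dict[str, List[str]]:
    """Turn the tunnel list into an undirected adjacency list."""
    graph: Dict[str, List[str]] = {}
    for a, b in tunnels:
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)
    return graph


def overlay_hops(graph: Dict[str, List[str]], src: str, dst: str) -> Optional[int]:
    """Breadth-first search: tunnels on the shortest path, or None if unreachable."""
    seen = {src}
    queue = deque([(src, 0)])
    while queue:
        node, hops = queue.popleft()
        if node == dst:
            return hops
        for neighbour in graph.get(node, []):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, hops + 1))
    return None


def e2e_possible(graph: Dict[str, List[str]], src: str, dst: str) -> bool:
    """True if dst is within MAX_E2E_HOPS permanent tunnels of src."""
    hops = overlay_hops(graph, src, dst)
    return hops is not None and hops <= MAX_E2E_HOPS


GRAPH = build_graph(PERMANENT_TUNNELS)

if __name__ == "__main__":
    for dst in ("VCE-A2", "VCE-B2"):
        print(dst, overlay_hops(GRAPH, "VCE-A1", dst), e2e_possible(GRAPH, "VCE-A1", dst))
```

In this model VCE-A1 reaches VCE-A2 in the same region in two hops (E2E possible), while VCE-B2 in the other region is three hops away (E2E not possible).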

The idea was to reinstate (relearn) all Branch routes on a Hub device that every other Branch Edge can reach via a direct or 2-hop overlay path, because only routes reachable via a direct or 2-hop overlay path are considered valid.

In OFC you need to enable the redistribution of those OSPF external routes back into the overlay (1) and make sure that Hub routes are preferred over Router (underlay) routes (2).

The OFC route table above then shows that those Branch routes are also advertised by the DC-Hub Edges.
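The reinstatement trick can also be expressed as a small route filter. This sketch is a simplified illustration of the rule described above, not the Orchestrator's actual route-selection algorithm: a route is kept only if its advertiser is at most two overlay hops away, and per prefix the closest advertiser wins. The hop counts as seen from VCE-A1 come from the traceroutes in this post; the prefix 10.3.202.0/24 is a hypothetical /24 around the traced host 10.3.202.79.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Working assumption: only routes whose advertiser can be reached over a
# direct or 2-hop overlay path are treated as valid.
MAX_VALID_HOPS = 2

# Overlay hop counts from VCE-A1, taken from the traceroutes in this post.
HOPS_FROM_VCE_A1: Dict[str, int] = {
    "Hub-A2": 1,
    "Hub-DC-1": 2,
    "VCE-B2": 3,
}


@dataclass(frozen=True)
class OverlayRoute:
    prefix: str      # hypothetical /24 around the traced host 10.3.202.79
    advertiser: str  # Edge or Hub that advertises the prefix into the overlay


def accept_routes(routes: List[OverlayRoute], hops_to: Dict[str, int]) -> Dict[str, OverlayRoute]:
    """Drop routes whose advertiser is too far away; per prefix keep the closest."""
    best: Dict[str, OverlayRoute] = {}
    for route in routes:
        hops: Optional[int] = hops_to.get(route.advertiser)
        if hops is None or hops > MAX_VALID_HOPS:
            continue  # unknown or more than 2 hops away: not accepted
        current = best.get(route.prefix)
        if current is None or hops < hops_to[current.advertiser]:
            best[route.prefix] = route
    return best


# Before reinstatement only the remote Branch Edge advertises the prefix;
# afterwards the DC Hub advertises it as well.
BEFORE = [OverlayRoute("10.3.202.0/24", "VCE-B2")]
AFTER = BEFORE + [OverlayRoute("10.3.202.0/24", "Hub-DC-1")]

if __name__ == "__main__":
    print("before:", accept_routes(BEFORE, HOPS_FROM_VCE_A1))
    print("after: ", accept_routes(AFTER, HOPS_FROM_VCE_A1))
```

Before reinstatement VCE-A1 accepts nothing for the remote prefix, because VCE-B2 is three hops away; once Hub-DC-1 also advertises it, the route is accepted via the 2-hop DC Hub. The sketch only models which routes are accepted: the actual forwarding path in the cross-region traceroute runs via Hub-B, not via the DC Hub.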

Note: The same mechanism should also work with BGP.

The DC Hub is still reachable from any Edge via a 2-hop overlay path:

VPC-A1> trace 10.1.201.2 (VPC-DC)

trace to 10.1.201.2, 8 hops max, press Ctrl+C to stop

1 10.2.201.1 28.072 ms 1.780 ms 1.321 ms (VCE-A1)

2 100.64.112.2 84.274 ms 7.221 ms 8.106 ms OVL 1 (Hub-A2)

3 100.64.121.2 13.552 ms 10.555 ms 12.114 ms OVL 2 (Hub-DC-1)

4 10.0.201.2 27.505 ms 14.941 ms 17.847 ms (R-DC)

5 *10.1.201.2 11.181 ms (ICMP type:3, code:3, Destination port unreachable)

Now the reinstated routes are accepted into the local route tables of our regional Branch Edges, and we can reach Branches in another region via a 3-hop overlay path:

trace to 10.3.202.79, 8 hops max, press Ctrl+C to stop

1 10.2.201.1 4.118 ms 1.293 ms 1.840 ms (VCE-A1)

2 100.64.112.2 348.161 ms 10.031 ms 13.470 ms OVL 1 (Hub-A2)

3 100.64.113.2 265.984 ms 39.569 ms 17.451 ms OVL 2 (Hub-B)

4 100.64.104.2 102.274 ms 54.166 ms 64.786 ms OVL 3 (VCE-B2)

5 *10.3.202.79 186.271 ms (ICMP type:3, code:3, Destination port unreachable)
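If you collect a lot of these traces, a few lines of Python can pull out the overlay path. This sketch relies on the `OVL n (name)` suffixes as they appear in the traces in this post; they look like hand-added annotations rather than raw `trace` output, so the regular expression is an assumption that only fits this annotated format.

```python
import re
from typing import List, Tuple

# Matches the annotated hop lines used in this post, for example
# "2 100.64.112.2 84.274 ms 7.221 ms 8.106 ms OVL 1 (Hub-A2)".
# Lines without an "OVL n (name)" suffix are ignored.
OVL_LINE = re.compile(r"^\s*\d+\s+\*?(\S+)\s.*?\bOVL\s+(\d+)\s+\(([^)]+)\)")


def overlay_path(trace_text: str) -> List[Tuple[int, str, str]]:
    """Return (overlay hop, tunnel IP, device name) for each annotated hop."""
    path: List[Tuple[int, str, str]] = []
    for line in trace_text.splitlines():
        match = OVL_LINE.match(line)
        if match:
            ip, index, name = match.groups()
            path.append((int(index), ip, name))
    return path


TRACE_TO_DC = """
1 10.2.201.1 28.072 ms 1.780 ms 1.321 ms (VCE-A1)
2 100.64.112.2 84.274 ms 7.221 ms 8.106 ms OVL 1 (Hub-A2)
3 100.64.121.2 13.552 ms 10.555 ms 12.114 ms OVL 2 (Hub-DC-1)
4 10.0.201.2 27.505 ms 14.941 ms 17.847 ms (R-DC)
"""

TRACE_TO_REGION_B = """
1 10.2.201.1 4.118 ms 1.293 ms 1.840 ms (VCE-A1)
2 100.64.112.2 348.161 ms 10.031 ms 13.470 ms OVL 1 (Hub-A2)
3 100.64.113.2 265.984 ms 39.569 ms 17.451 ms OVL 2 (Hub-B)
4 100.64.104.2 102.274 ms 54.166 ms 64.786 ms OVL 3 (VCE-B2)
"""

if __name__ == "__main__":
    for label, text in (("to DC", TRACE_TO_DC), ("to region B", TRACE_TO_REGION_B)):
        path = overlay_path(text)
        names = " -> ".join(name for _, _, name in path)
        print(f"{label}: {len(path)} overlay hops: {names}")
```

For the two traces it reports Hub-A2 -> Hub-DC-1 (2 overlay hops) and Hub-A2 -> Hub-B -> VCE-B2 (3 overlay hops).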

So if you need redundancy, you need either three Hubs in the DC (Vladimir's idea) or a second set of two Hubs in another DC (my idea).
